Kaustubh Sharma, Co-Founder and CEO at ambient voice provider MarianaAI, promises a “three-click process” to generate documentation from a patient...

Author - Andy Oram

Andy is a writer and editor in the computer field. His editorial projects have ranged from a legal guide covering intellectual property to a graphic novel about teenage hackers. A correspondent for Healthcare IT Today, Andy also writes often on policy issues related to the Internet and on trends affecting technical innovation and its effects on society. Print publications where his work has appeared include The Economist, Communications of the ACM, Copyright World, the Journal of Information Technology & Politics, Vanguardia Dossier, and Internet Law and Business. Conferences where he has presented talks include O'Reilly's Open Source Convention, FISL (Brazil), FOSDEM (Brussels), DebConf, and LibrePlanet. Andy participates in the Association for Computing Machinery's policy organization, named USTPC, and is on the editorial board of the Linux Professional Institute.

This unique video presents a far-ranging conversation with Nio Queiro, President of The Queiro Group. Topics include changes in revenue cycle management (RCM)...

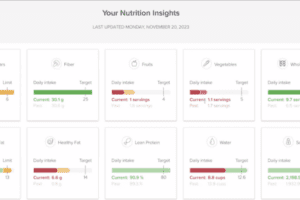

This series of articles has covered the ways that digital technologies contribute to weight loss. To round out the series, this final article covers general...

This article is part of a series on today’s philosophies regarding weight change and how digital interventions can help. In this article, we’ll...

Internet-based tracking and coaching are being employed wherever health becomes a matter of lifestyle. Technologies can reach into every detail of a...

Independent clinical practices are in danger everywhere: if they don’t go out of business, large hospital chains tend to swallow them up. Bill Lucchini...

The first article in this series laid out what we know about body weight and obesity today. The rest of the Lean Digital series will look at some contributions...

Is anyone not obsessed with weight? The health care field certainly is. Researchers have found ties between high body weight and an oversized list of unhealthy...

Payers are always wary about funding new treatments for illnesses and conditions: When millions of dollars are at stake, payers want demonstrated improvements...

Amazing how a trivial detail such as a postal address can cause so many headaches and so much waste. But in health care, a lack of address standardization is a...